What is ANSI lumen (lm, lm), unit of measurement? Meaning. Encodings: useful information and a brief retrospective Encodings: useful information and a brief retrospective

ANSI is a character display standard developed by the American National Standards Institute (code 1251). The ANSI standard uses only one byte to represent each character, and is therefore limited to a maximum of 256 characters, including punctuation. Codes 32 to 126 follow the ASCII standard. ASCII (code 688) was used in DOS, ANSI is used in Windows.

Literature

Arkhangelsky A.Ya. Programming in C++ Builder6. Ed. BINOM, 2004.

Arkhangelsky A.Ya. C++Builder6. Reference manual. Moscow, Ed. BINOM, 2004.

Kimmel P. Borland C++5 "BHV-St. Petersburg, 2001.

Klimova L.M. C++ Practical programming. Solution of typical tasks. "KUDITS-IMAGE", M.2001.

Kultin N. С/С++ in tasks and examples. St. Petersburg "BHV-Petersburg", 2003.

Pavlovskaya T.A. C/C++ Programming in a high-level language. Peter, Moscow-St. Petersburg-… 2005

Pavlovskaya T.A., Shchupak Yu.A. C++. Object-oriented programming. Workshop. SPb., Peter, 2005.

Podbelsky V.V. C++ language Finance and statistics, Moscow, 2003.

Polyakov A.Yu., Brusentsev V.A. Methods and algorithms of computer graphics in VisualС++ examples. SPb BHI-Petersburg, 2003

Savitch W. Language C++ course of object-oriented programming. Williams Publishing House. Moscow-St. Petersburg-Kiev, 2001

Wellin S. How Not to Program in C++. "Peter". Moscow-St. Petersburg-Nizhny Novgorod-Voronezh-Novosibirsk-Rostov-on-Don-Yekaterinburg-Samara-Kiev-Kharkov-Minsk, 2004.

Schildt G. The Complete Guide to C++. Ed. House "Williams" Moscow-St. Petersburg-Kiev, 2003.

Schildt G. Self-tutor C/C++. St. Petersburg, BHV-Petersburg, 2004.

Schildt G. Programmer's Guide to C/C++ Ed. House "Williams" Moscow-St. Petersburg-Kiev, 2003.

Shimanovich.L. С/С++ in examples and tasks. Minsk, New knowledge, 2004.

Stern V. Fundamentals of C++. Methods of software engineering. Ed. Lori.

Why is garbage displayed instead of Russian letters in the console application?

and right! You typed the text of the program in the native Visual Studio editor using code page 1251, and the text output in the console application is using code page 866. What to do with this disgrace? As you know, from any stalemate there are at least 3 exits. Let's consider them in order.

Exit 1

Type the text of the program in the editor of any console file manager.

But what about syntax highlighting, displaying help on the selected function using F1, and other little charms that brighten up the bleak life of a simple programmer? No, this is not an option for us.

Exit 2

If you started writing a console program from scratch, it may suit you. Let's rewrite our little masterpiece like this:

|

#include "stdafx.h" #include "windows.h" int main(int argc, char* argv) char s="Hello everyone!"; printf("%s\n", s); |

The keyword here is CharToOem - it is this function that will convert our string to the desired code page. With the output of our program, everything is fine now.

But the next question arises - what to do if you need to recompile your old 100,000-line DOS program written in Borland C++ 3.1 into a Windows console application, in which such a situation occurs in every second line. But you still have to adjust it to the MS compiler, and you also want to optimize a couple of pieces of code ...

Here it probably makes sense to use the knight's move, in the sense

Reg.ru: domains and hosting

The largest registrar and hosting provider in Russia.

Over 2 million domain names in service.

Promotion, mail for domain, solutions for business.

More than 700 thousand customers around the world have already made their choice.

*Mouseover to pause scrolling.

Back forward

Encodings: useful information and a brief retrospective

I decided to write this article as a small review on the issue of encodings.

We will understand what encoding is in general and touch a little on the history of how they appeared in principle.

We will talk about some of their features and also consider the points that allow us to work with encodings more consciously and avoid the appearance on the site of the so-called krakozyabrov, i.e. unreadable characters.

So let's go...

What is an encoding?

Simply put, encoding is a table of character mappings that we can see on the screen, to certain numeric codes.

Those. each character that we enter from the keyboard, or see on the monitor screen, is encoded by a certain sequence of bits (zeros and ones). 8 bits, as you probably know, are equal to 1 byte of information, but more on that later.

The appearance of the characters themselves is determined by the font files that are installed on your computer. Therefore, the process of displaying text on the screen can be described as a constant matching of sequences of zeros and ones to some specific characters that are part of the font.

The progenitor of all modern encodings can be considered ASCII.

This abbreviation stands for American Standard Code for Information Interchange(American standard encoding table for printable characters and some special codes).

This single byte encoding, which originally contained only 128 characters: letters of the Latin alphabet, Arabic numerals, etc.

Later it was expanded (initially it did not use all 8 bits), so it became possible to use not 128, but 256 (2 to 8) different characters that can be encoded in one byte of information.

This improvement made it possible to add to ASCII symbols of national languages, in addition to the already existing Latin alphabet.

There are a lot of options for extended ASCII encoding, due to the fact that there are also a lot of languages in the world. I think that many of you have heard of such an encoding as KOI8-R is also an extended ASCII encoding, designed to work with Russian characters.

The next step in the development of encodings can be considered the appearance of the so-called ANSI encodings.

Essentially they were the same extended versions of ASCII, however, various pseudo-graphic elements were removed from them and typographic symbols were added, for which there were not enough "free spaces" previously.

An example of such an ANSI encoding is the well-known Windows-1251. In addition to typographic symbols, this encoding also included letters of the alphabets of languages close to Russian (Ukrainian, Belarusian, Serbian, Macedonian and Bulgarian).

ANSI encoding is the collective name for. In reality, the actual encoding when using ANSI will be determined by what is specified in the registry of your Windows operating system. In the case of Russian, this will be Windows-1251, however, for other languages, it will be a different kind of ANSI.

As you understand, a bunch of encodings and the lack of a single standard did not bring to good, which was the reason for frequent meetings with the so-called krakozyabry- an unreadable meaningless set of characters.

The reason for their appearance is simple - it is attempt to display characters encoded with one encoding table using another encoding table.

In the context of web development, we may encounter bugs when, for example, Russian text is mistakenly saved in the wrong encoding that is used on the server.

Of course, this is not the only case when we can get unreadable text - there are a lot of options here, especially when you consider that there is also a database in which information is also stored in a certain encoding, there is a database connection mapping, etc.

The emergence of all these problems served as an incentive to create something new. It was supposed to be an encoding that could encode any language in the world (after all, with the help of single-byte encodings, with all the desire, it is impossible to describe all the characters, say, of the Chinese language, where there are clearly more than 256), any additional special characters and typography.

In a word, it was necessary to create a universal encoding that would solve the problem of bugs once and for all.

Unicode - universal text encoding (UTF-32, UTF-16 and UTF-8)

The standard itself was proposed in 1991 by a non-profit organization "Unicode Consortium"(Unicode Consortium, Unicode Inc.), and the first result of his work was the creation of an encoding UTF-32.

Incidentally, the abbreviation UTF stands for Unicode Transformation Format(Unicode Conversion Format).

In this encoding, to encode one character, it was supposed to use as many as 32 bits, i.e. 4 bytes of information. If we compare this number with single-byte encodings, then we will come to a simple conclusion: to encode 1 character in this universal encoding, you need 4 times more bits, which "weights" the file 4 times.

It is also obvious that the number of characters that could potentially be described using this encoding exceeds all reasonable limits and is technically limited to a number equal to 2 to the power of 32. It is clear that this was a clear overkill and wastefulness in terms of the weight of the files, so this encoding was not widely used.

It was replaced by a new development - UTF-16.

As the name implies, in this encoding one character is encoded no longer 32 bits, but only 16(i.e. 2 bytes). Obviously, this makes any character twice "lighter" than in UTF-32, but also twice as "heavier" than any character encoded using a single-byte encoding.

The number of characters available for encoding in UTF-16 is at least 2 to the power of 16, i.e. 65536 characters. Everything seems to be fine, besides, the final value of the code space in UTF-16 has been expanded to more than 1 million characters.

However, this encoding did not fully satisfy the needs of developers. Let's say if you write using exclusively Latin characters, then after switching from the extended version of the ASCII encoding to UTF-16, the weight of each file doubled.

As a result, another attempt was made to create something universal, and this something has become the well-known UTF-8 encoding.

UTF-8- this multibyte character encoding with variable character length. Looking at the name, you might think, by analogy with UTF-32 and UTF-16, that 8 bits are used to encode one character, but this is not so. More precisely, not quite so.

This is because UTF-8 provides the best compatibility with older systems that used 8-bit characters. To encode a single character in UTF-8 is actually used 1 to 4 bytes(hypothetically possible up to 6 bytes).

In UTF-8, all Latin characters are encoded with 8 bits, just like in ASCII encoding.. In other words, the basic part of the ASCII encoding (128 characters) has moved to UTF-8, which allows you to "spend" only 1 byte on their representation, while maintaining the universality of the encoding, for which everything was started.

So, if the first 128 characters are encoded with 1 byte, then all other characters are already encoded with 2 bytes or more. In particular, each Cyrillic character is encoded with exactly 2 bytes.

Thus, we got a universal encoding that allows us to cover all possible characters that need to be displayed without "heavier" files unnecessarily.

With BOM or without BOM?

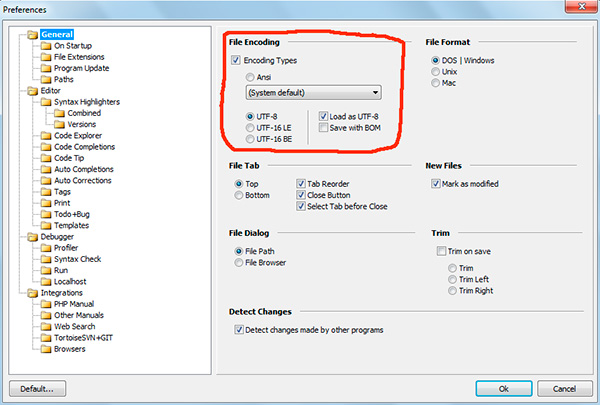

If you have worked with text editors (code editors) such as Notepad++, phpDesigner, rapid PHP etc., then they probably paid attention to the fact that when setting the encoding in which the page will be created, you can usually choose 3 options:

ANSI

-UTF-8

- UTF-8 without BOM

I must say right away that it is always worth choosing the last option - UTF-8 without BOM.

So what is BOM and why don't we need it?

BOM stands for Byte Order Mark. This is a special Unicode character used to indicate the byte order of a text file. According to the specification, its use is optional, but if BOM is used, it must be set at the beginning of the text file.

We will not go into the details of the work BOM. For us, the main conclusion is the following: using this service character together with UTF-8 prevents programs from reading the encoding normally, resulting in script errors.

American National Standards Institute(English) A merican n ational s tandards i institute, ANSI) is an association of American industrial and business groups that develops trade and communication standards. He is a member of ISO and IEC , representing US interests there .

History

ANSI was originally formed in 1918 when five engineering societies and three government agencies founded the "American Engineering Standards Committee" ( AESC- English. American Engineering Standards Committee). In 1928 the committee became known as the American Standards Association. ASA- English. American Standards Association). In 1966, the ASA was reorganized and became the "Standards Institute of the United States of America" ( USASI- English. United States of America Standards Institute). The current name was adopted in 1969.

Until 1918, there were five engineering societies involved in the development of technical standards:

- American Institute of Electrical Engineers (AIEE, now IEEE)

- American Society of Mechanical Engineers (ASME)

- American Society of Civil Engineers (ASCE)

- American Institute of Mining Engineers (AIME, now the American Institute of Mining, Metallurgical and Petroleum Engineers)

- American Society for Testing and Materials (now ASTM)

In 1916, the American Institute of Electrical Engineers (now IEEE) took the initiative to bring these organizations together to form an independent national body to coordinate standards development, harmonization, and approval of national standards. The above five organizations became the main members of the United Engineering Society (United Engineering Society - UES), subsequently the US War Department, the Navy (merged in 1947 to become the US Department of Defense) and Commerce were invited to participate as founders.

In 1931, the organization (renamed ASA in 1928) became part of the US National Committee of the International Electrotechnical Commission (IEC) which was formed in 1904 to develop standards in electrical and electronic engineering

Members

ANSI members include government agencies, organizations, academic and international organizations, and individuals. In total, the Institute represents the interests of more than 270,000 companies and organizations and 30 million professionals worldwide /

Activity

Although ANSI itself does not develop standards, the Institute oversees the development and use of standards by accrediting the procedures of standards developing organizations. ANSI accreditation means that the procedures used by standards developing organizations meet the Institute's requirements for openness, balance, consensus, and due process.

ANSI also designates specific standards as American National Standards, or ANS, when the Institute determines that the standards were developed in an environment that is fair, accessible, and responsive to the needs of various stakeholders.

International activity

In addition to US standardization activities, ANSI promotes the international use of US standards, advocates US political and technical position in international and regional standards organizations, and encourages the adoption of international standards as national standards.

The Institute is the official US representative in two major international standards organizations, the International Organization for Standardization (ISO) as a founding member, and the International Electrotechnical Commission (IEC) through the US National Committee (USNC). ANSI participates in almost the entire technical program of ISO and IEC and manages many key committees and subgroups. In many cases, US standards are submitted to ISO and IEC via ANSI or USNC, where they are accepted in whole or in part as International Standards.

Acceptance of ISO and IEC standards as US standards increased from 0.2% in 1986 to 15.5% in May 2012.

Directions of standardization

The Institute manages nine standardization groups:

- ANSI Homeland Defense and Security Standardization Collaborative (HDSSC)

- ANSI Nanotechnology Standards Panel (ANSI-NSP - ANSI Nanotechnology Standards Panel)

- ID Theft Prevention and ID Management Standards Panel (IDSP - ID Theft Prevention and ID Management Standards Panel)

- ANSI Energy Efficiency Standardization Coordination Collaborative (EESCC)

- Nuclear Energy Standards Coordination Collaborative (NESCC-Nuclear Energy Standards Coordination Collaborative)

- Electric Vehicles Standards Panel (EVSP)

- ANSI-NAM Network on Chemical Regulation

- ANSI Biofuels Standards Coordination Panel

- Healthcare Information Technology Standards Panel (HITSP)

- American Piping and Machinery Certification Agency

Each of the groups is engaged in identifying, coordinating and harmonizing voluntary standards related to these areas. In 2009, ANSI and (NIST) formed the Nuclear Energy Standards Coordinating Collaboration (NESCC). NESCC is a collaborative initiative to identify and meet the current need for standards in the nuclear industry.

Standards

Of the standards adopted by the institute, the following are known:

Contrary to popular misconception, ANSI did not adopt the 8-bit code page standards, although it was involved in the development of the ISO-8859-1 encoding and possibly some others.

Notes

- About ANSI

- RFC

- ANSI: Historical overview (indefinite) . ansi.org. Retrieved 31 October 2016.

- History of ANSI

Often in web programming and layout of html pages, you have to think about the encoding of the edited file - after all, if the encoding is selected incorrectly, then there is a chance that the browser will not be able to automatically determine it and as a result the user will see the so-called. "Krakozyabry".

Perhaps you yourself have seen strange symbols and question marks instead of normal text on some sites. All this occurs when the encoding of the html page and the encoding of the file itself of this page do not match.

At all, what is text encoding? It's just a set of characters, in English "charset" (character set). It is needed in order to convert text information into data bits and transmit, for example, via the Internet.

Actually, the main parameters that distinguish encodings are the number of bytes and the set of special characters into which each character of the source text is converted.

Brief history of encodings:

One of the first to transmit digital information was the appearance of the ASCII encoding - American Standard Code for Information Interchange - American standard code table, adopted by the American National Standards Institute - American National Standards Institute (ANSI).

These abbreviations can be confusing. For practice, it is important to understand that the initial encoding of the created text files may not support all the characters of some alphabets (for example, hieroglyphs), so there is a tendency to move to the so-called. standard Unicode (Unicode), which supports universal encodings − utf-8, utf-16, utf-32 and etc.

The most popular Unicode encoding is Utf-8. Usually, site pages are now typeset in it and various scripts are written. It allows you to easily display various hieroglyphs, Greek letters and other conceivable and inconceivable characters (character size up to 4 bytes). In particular, all WordPress and Joomla files are written in this encoding. And also some web technologies (in particular, AJAX) are only able to process utf-8 characters normally.

Set the encodings of a text file when creating it with a regular notepad. Clickable

In Runet, you can still find sites written with the expectation of encoding Windows-1251 (or cp-1251). This is a special encoding designed specifically for Cyrillic.

Basically "ANSI" refers to the legacy code page in Windows. See also on this topic. The first 127 characters are identical to ASCII in most code pages, however the top characters are different.

However, ANSI automatically not stands for CP1252 or Latin 1.

Despite all the confusion, you should just avoid such issues for the time being and use Unicode.

What is the ANSI encoding format? Is this the default system format? How is it different from ASCII?

At one time, Microsoft, like everyone else, used 7-bit character sets, and they came up with their own when they suited them, although they kept ASCII as the main subset. They then realized that the world had moved to 8-bit encodings and that there were international standards such as the ISO-8859 family. In those days, if you wanted an international standard and you lived in the US, you bought it from the American National Standards Institute ANSI, which reissued the international standards with their own branding and numbers (that's because the US government wants American standards, and not international standards). So the Microsoft ISO-8859 copy said "ANSI" on the cover. And because Microsoft wasn't very used to standards in those days, they didn't realize that ANSI had published a lot of other standards. So they referred to the ISO-8859 family of standards (and the variants they invented because they didn't understand the standards in those days) by the cover title "ANSI" and it found its way into Microsoft's user documentation and therefore into the community. users. This was about 30 years ago, but you still hear the name occasionally today.

Or you can query your registry:

C:\>reg query HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Nls\CodePage /f ACP HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Nls\CodePage ACP REG_SZ 1252 End of search: 1 match(es) found. C:\>

When using single-byte characters, the ASCII format defines the first 127 characters. Extended characters from 128-255 are defined by various ANSI codes to provide limited support for other languages. To understand ANSI encoding, you need to know what code page it uses.

Technically, ANSI should be the same as US-ASCII. It refers to the ANSI X3.4 standard, which is simply the ANSI organization's approved version of ASCII. The use of upper bit characters is not defined in ASCII/ANSI as it is a 7-bit character set.

However, years of misuse of the term by DOS and subsequently by the Windows community have left their practical meaning as "the system code page of whatever machine it is." The system code page is also sometimes known as "mbcs" as in East Asian systems, which can be an encoding with multiple bytes per character. Some code pages may even use top bit bytes as bytes of bytes in a multibyte sequence, so it's not even strictly compatible with plain ASCII... but even then it's still called ANSI.

In US and Western Europe default settings, "ANSI" maps to Windows code page 1252. This is not the same as ISO-8859-1 (although it's pretty similar). On other machines it could be anything. This renders ANSI completely useless as an external encoding identifier.

I remember when ANSI text was referring to pseudo-VT-100 escape codes used in DOS via the ANSI.SYS driver to change the stream text flow.... Probably not what you're talking about, but if it sees

What do page out errors indicate?

What do page out errors indicate? Synchronous and asynchronous I/O Asynchronous I/O

Synchronous and asynchronous I/O Asynchronous I/O Finite state machines, how to program without messing up

Finite state machines, how to program without messing up